LING 539

Statistical Natural Language Processing

This 3-credit course introduces the key concepts underlying statistical natural language processing, a domain that includes “traditional” statistical approaches like Bayesian classifiers as well as more modern neural approaches whose design is nonetheless statistically based. Students will learn a variety of techniques for the computational modeling of natural language, including n-gram models, smoothing, hidden Markov models, Bayesian inference, expectation maximization, the Viterbi algorithm, the Inside-Outside algorithm for probabilistic context-free grammars, and higher-order language models. This course complements the introductory course in symbolic and analytic computational approaches to language, LING/CSC/PSY 538 Computational Linguistics.

Given the performance improvements (and massive hype!) around transformer-based LLMs since 2022, many students are eager to learn how to work with them, including OpenAI’s GPT models, Google’s Gemini, Anthropic’s Claude, and many others. However, rather than rushing into what it takes simply to use such models, our graduate program gives students a solid foundation in statistical approaches so that they understand more deeply what problems these models are designed to solve, how they address those problems, and what their limitations are.

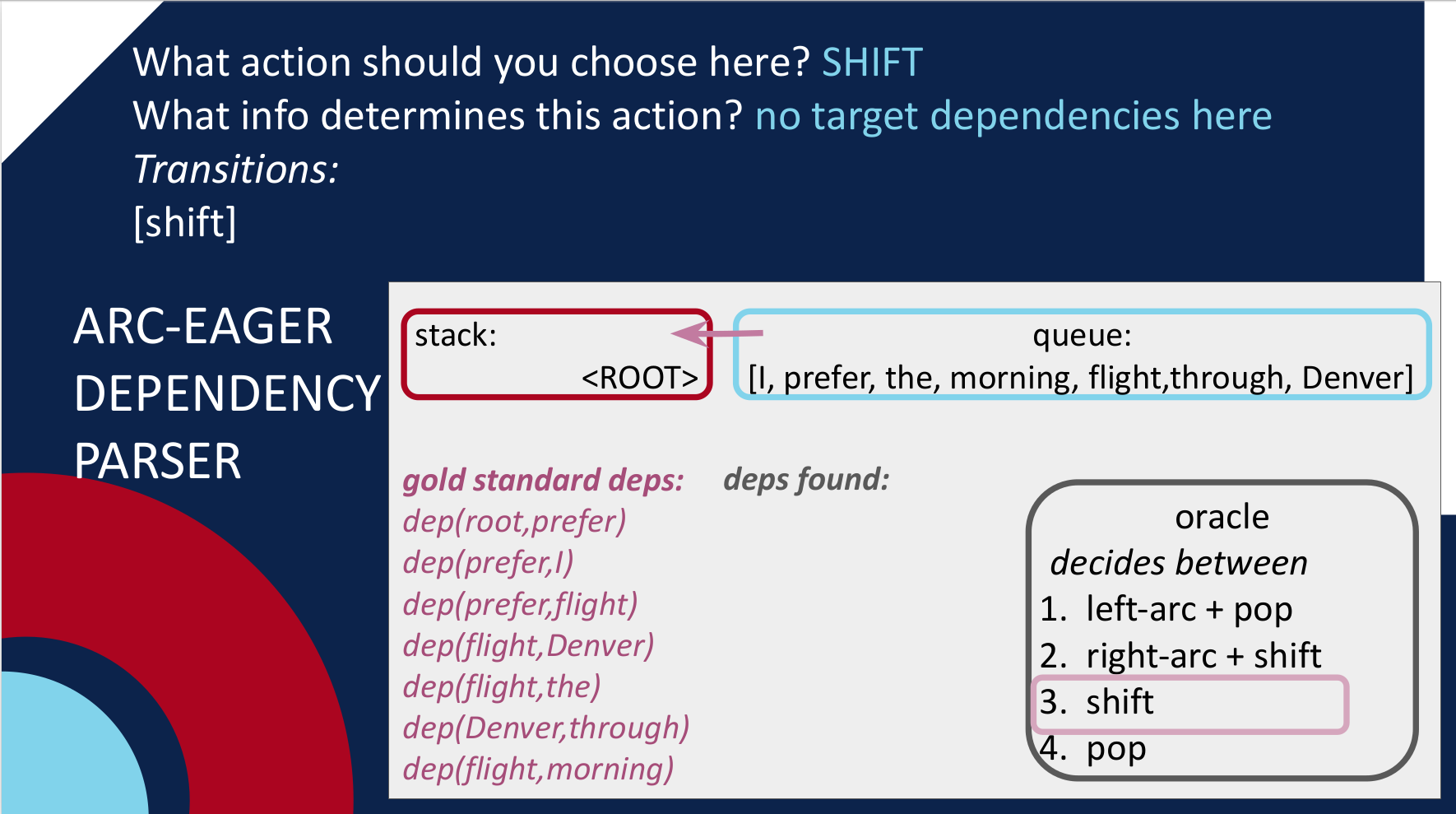

This is the first course in a two-course series (LING 539 and LING 582) that brings students from a basic introduction to statistical approaches up to working with LLMs. In this first course, students come to understand a range of foundational natural language processing (NLP) topics, such as the special properties of language as data, text preprocessing and why it is needed, and the importance of choosing effective representations for language-based objects. We introduce basic concepts in machine learning and text classification, such as representing objects using features and selecting effective features for the NLP task at hand, as students learn the concepts underlying classification with naive Bayes and logistic regression. Students learn the utility of a variety of word representations (from document-term vectors and the “bag of words” approach up to static embeddings), then apply these principles to realistic NLP tasks like sequence labeling (part-of-speech tagging, shallow parsing/chunking, etc.) and structured prediction (chart-based parsing, transition-based dependency parsing).

Students will first practice applying new concepts (including programming) in low-stakes TopHat review activities. They will then demonstrate their ability to use these concepts, while also practicing with Git and GitHub, in a series of realistic programming assignments administered through GitHub Classroom. Students enrolled in graduate-level sections will also participate in a private class competition on Kaggle, where they can apply an approach of their choice to an open-ended text classification task.

Most recent syllabus

All past syllabi for this course

- Spring 2026 7W2 (online) (in prep)

- Spring 2026 15W (in person)

- Spring 2024 (in person)

- Spring 2023 (online)